|

Balluff - BVS CA-GX0 / BVS CA-GX2 Technical Documentation

|

Nowadays computer architectures usually incorporate multiple CPU cores inside a single chip or even multiple physical CPUs on a motherboard. This is why modern operating systems, SDKs used for building applications and applications themselves often use multiple threads or processes to make good use of this hardware. This is done to spread the CPU load caused by the applications equally across all the available processors to achieve optimal performance. Optimal performance can mean a couple of things but usually it boils down to get certain results faster than these could be generated by a single thread or to keep a graphical user interface responsive while certain tasks are being performed in the background.

With GigE Vision™ devices streaming at bandwidths of around 5 GBit/s or higher this sometimes becomes a problem! Especially maintaining a stable data reception without losing individual images or parts of images while performing extensive number crunching in parallel might not always work as reliable as expected.

This comes due to the fact that usually a network connection is always processed on a certain CPU core. Assuming that there are a lot of parallel network connections and all these network connections have been assigned to a certain CPU and also that each individual network connection does not add significantly to the overall bandwidth consumed this makes sense: Usually an incoming network packet will generate an interrupt sooner or later (see interrupt moderation about that sooner or later). When this interrupt is always generated on the same CPU core this saves some time since register content etc. does not need to be transferred to a different core without generating any value while doing so and maybe more important the number of cache misses when accessing RAM is reduced. So modern operating system and network card driver developers have spent quite some development effort NOT to switch CPUs unless necessary.

This works well and improves system performance when the previous assumption is true, i.e. when dealing with a lot or at least multiple parallel connections that all carry more or less a similar amount of data per time slot. Now with GigE Vision™ devices this is usually not the case. Quite the opposite is true: Usually a single network stream carries all, or at least a significant share, of the data that is received by a NIC in the system. This results in some CPU cores being burdened with more work than others.

For one 1 GBit/s connections in modern hardware this usually is negligible since the optimized NIC drivers and GigE Vision™ capture filter drivers combined with a powerful CPU are able to cope with the incoming data easily. Also multiple 1 GBit/s streams usually can be dealt with without running into trouble since the NIC driver will try to bind each connection/stream to a different CPU core at least in a pseudo-random fashion so that chances are good that all the work will be equally distributed. Sometimes (when complete control is needed/wanted) this randomness is not desirable and then continue to read this section makes sense for users of 1-2 GBit/s cameras. In any case 5 GBit/s or higher bandwidths coming from a single device via a single connection are much more challenging even for current CPU architectures:

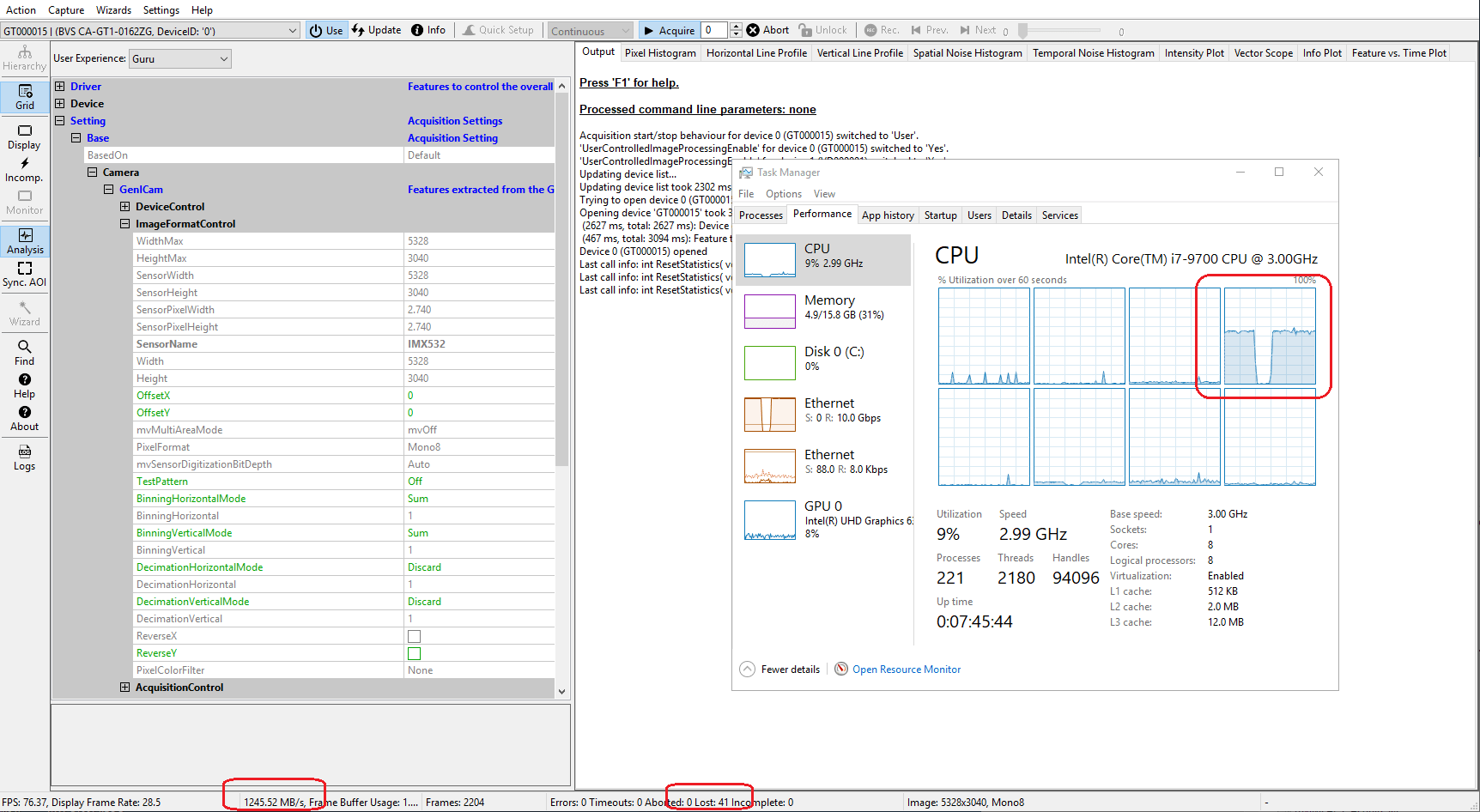

In the above image it can be seen that the CPU load caused by a 10 GigE device can no longer be ignored! The important thing to keep in mind is also, that the CPU actually processing the data cannot be known upfront. It is quite possible that it is a different one each time the acquisition is started! This depends on various things like

Now when adding a time consuming parallel algorithm to the system it usually can be observed that the operating system will assign equal chunks of work to EVERY CPU core in the system. Even to those processing the incoming network data. This can overload the CPU core which is quite busy already dealing with image data and can result in lost data. Even if the core is not constantly overloaded temporary problems may arise simply from the fact that every now and then the processor must perform a short time-slice of work that is not GigE Vision™ specific. Since data is arriving in the NIC very fast and temporary buffers available to the NIC driver are usually small (see Optimizing Network Performance for fine-tuning) switching to another task might consume just enough time to let internal buffers overflow.

With the Balluff Multi-Core Acquisition Optimizer two things can be achieved that can result in an improved overall system stability:

The Balluff Multi-Core Acquisition Optimizer makes use of modern NICs RSS (Receive Side Scaling) features and therefore requires a RSS capable network interface card to operate. Most of today's NICs have this feature however the quality of the implementation and the amount of features that can be controlled may vary across manufacturers and models.

So far good results have been achieved with the following products:

Acceptable results have been achieved with: