Introduction

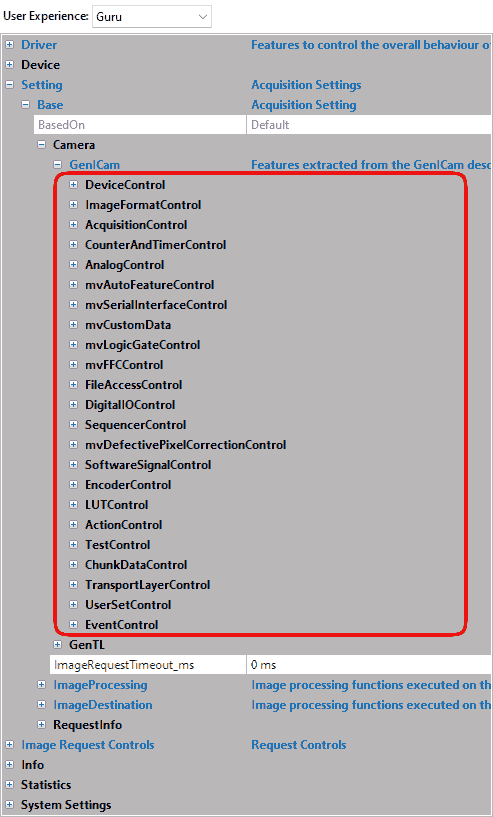

For new applications, or to configure the device via ImpactControlCenter, we recommend using the GenICam interface layout, as it allows the most flexible access to the device features.

After you've set the interface layout to GenICam (either programmatically or using ImpactControlCenter), all GenICam controls of the device are available.

- Note

- Which controls are supported depends on the device. To check whether your device supports a specific control or property, use the interactive "Product Comparison" chapter: select the desired property and click the "Rebuild Comparison Table" button.

In ImpactControlCenter, you can see them in "Setting → Base → Camera → GenICam":

As you can see, there are controls with and without the prefix "mv":

- Features with the "mv" prefix are unique, non-standard features developed by Balluff/MATRIX VISION.

- Features without the "mv" prefix are standard features as defined by the GenICam™ Standard Features Naming Convention (SFNC).

All of these are "camera based / device based" features, which can also be accessed when the camera is used with other GenICam / GigE Vision compliant third-party software.

- Note

- In GigE Vision™, timestamps are denoted in "device ticks"; for Balluff/MATRIX VISION devices, one tick equals one microsecond.

- Do not confuse the camera based / device based features with the features available in "Setting → Base → Image Processing". The latter control the Impact Acquire software stack and therefore introduce additional CPU load.
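Since one device tick corresponds to one microsecond on these devices, timestamps can be converted with plain arithmetic. The following is a minimal sketch; the function names are illustrative and not part of any API.

```python
# Assumption from the note above: 1 device tick == 1 microsecond.
TICKS_PER_SECOND = 1_000_000


def ticks_to_seconds(ticks: int) -> float:
    """Convert a device timestamp (in ticks) to seconds."""
    return ticks / TICKS_PER_SECOND


def frame_interval_ms(previous_ticks: int, current_ticks: int) -> float:
    """Time between two frame timestamps in milliseconds."""
    return (current_ticks - previous_ticks) / 1_000
```

For devices from other vendors the tick frequency may differ, so the constant would have to be adapted.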

Device Control

The "Device Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| DeviceType | deviceType | Returns the device type. |

| DeviceScanType | deviceScanType | Scan type of the sensor of the device. |

| DeviceVendorName | deviceVendorName | Name of the manufacturer of the device. |

| DeviceModelName | deviceModelName | Name of the device model. |

| DeviceManufacturerInfo | deviceManufacturerInfo | Manufacturer information about the device. |

| DeviceVersion | deviceVersion | Version of the device. |

| DeviceFirmwareVersion | deviceFirmwareVersion | Firmware version of the device. |

| DeviceSerialNumber | deviceSerialNumber | Serial number of the device. |

| DeviceUserID | deviceUserID | User-programmable device identifier. |

| DeviceTLVersionMajor | deviceTLVersionMajor | Major version of the transport layer of the device. |

| DeviceTLVersionMinor | deviceTLVersionMinor | Minor version of the transport layer of the device. |

| DeviceLinkSpeed | deviceLinkSpeed | Indicates the speed of transmission negotiated on the specified Link. |

| DeviceTemperature | deviceTemperature | Device temperature. |

| etc. |

related to the device and its sensor.

This control also provides a bandwidth control feature: select DeviceLinkThroughputLimit to set the maximum bandwidth in KBps.

Additionally, Balluff devices offer two temperature sensors, together with the following special features:

- device temperature upper limit (mvDeviceTemperatureUpperLimit)

- device temperature lower limit (mvDeviceTemperatureLowerLimit)

- device temperature limit hysteresis (mvDeviceTemperatureLimitHysteresis)

The use case Working with the temperature sensors shows how you can work with the temperature sensors.
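How a limit with hysteresis typically behaves can be illustrated with a small model. This is only a sketch of a classic hysteresis comparator; the class, its state names and the exact switching rule are assumptions made for illustration and do not describe the device firmware.

```python
class TemperatureLimitMonitor:
    """Illustrative model of upper/lower limits with a hysteresis band."""

    def __init__(self, upper_limit, lower_limit, hysteresis):
        self.upper_limit = upper_limit
        self.lower_limit = lower_limit
        self.hysteresis = hysteresis
        self.state = "in_range"

    def update(self, temperature):
        """Feed a new temperature reading and return the resulting state."""
        if self.state == "in_range":
            if temperature > self.upper_limit:
                self.state = "too_hot"
            elif temperature < self.lower_limit:
                self.state = "too_cold"
        elif self.state == "too_hot":
            # Only leave the alarm state once the temperature has dropped
            # below the limit by at least the hysteresis value.
            if temperature < self.upper_limit - self.hysteresis:
                self.state = "in_range"
        elif self.state == "too_cold":
            if temperature > self.lower_limit + self.hysteresis:
                self.state = "in_range"
        return self.state
```

The hysteresis band prevents the state from toggling rapidly when the temperature hovers around one of the limits.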

Balluff also offers some information properties about

- the FPGA (mvDeviceFPGAVersion) and

- the image sensor (mvDeviceSensorColorMode).

A further use case related to the "Device Control" is:

Image Format Control

The "Image Format Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| SensorWidth | sensorWidth | Effective width of the sensor in pixels. |

| SensorHeight | sensorHeight | Effective height of the sensor in pixels. |

| SensorName | sensorName | Name of the sensor. |

| Width | width | Width of the image provided by the device (in pixels). |

| Height | height | Height of the image provided by the device (in pixels). |

| BinningHorizontal, BinningVertical | binningHorizontal, binningVertical | Number of horizontal/vertical photo-sensitive cells to combine together. |

| DecimationHorizontal, DecimationVertical | decimationHorizontal, decimationVertical | Sub-sampling of the image. This reduces the resolution (width or height, respectively) of the image by the specified decimation factor. |

| TestPattern | testPattern | Selects the type of test image that is sent by the device. |

| etc. |

related to the format of the transmitted image.
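The effect of binning or decimation on the image size can be estimated as follows. This is a simplified sketch that assumes plain integer division; real devices may additionally round the result to a sensor-specific increment, and the function name is illustrative.

```python
def reduced_size(sensor_width, sensor_height, horizontal_factor=1, vertical_factor=1):
    """Estimate the image size after binning or decimation.

    Assumes the size is simply divided by the factor (integer division).
    """
    if horizontal_factor < 1 or vertical_factor < 1:
        raise ValueError("factors must be >= 1")
    return sensor_width // horizontal_factor, sensor_height // vertical_factor
```

For example, a factor of 2 in both directions halves width and height and therefore reduces the amount of image data to roughly a quarter.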

With TestPattern, for example, you can select the type of test image that is sent by the device. Here two special types are available:

- mvBayerRaw (the Bayer mosaic raw image)

- mvFFCImage (the flat-field correction image)

Additionally, Balluff offers numerous further features like:

- mvMultiAreaMode, which can be used to define multiple AOIs (Areas of Interest) in one image.

- See also

- The use case Working with multiple AOIs (mv Multi Area Mode) shows how this feature works.

Acquisition Control

The "Acquisition Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| AcquisitionMode | acquisitionMode | Sets the acquisition mode of the device. The different modes configure what the device sends and can be used for asynchronously grabbing and sending image(s). This works with the internal trigger and with an external hardware trigger whose edge is selectable. The external trigger uses ImageRequestTimeout (ms) to time out. |

| AcquisitionStart | acquisitionStart | Starts the acquisition of the device. |

| AcquisitionStop | acquisitionStop | Stops the acquisition of the device at the end of the current Frame. |

| AcquisitionAbort | acquisitionAbort | Aborts the acquisition immediately. |

| AcquisitionFrameRate | acquisitionFrameRate | Controls the acquisition rate (in Hertz) at which the frames are captured. Some cameras support a special internal trigger mode that allows more exact frame rates. This feature keeps the frame rate constant to an accuracy of +/-0.005 fps at 200 fps. This is achieved using frames with a length difference of up to 1 us. Please check in the sensor summary if this feature exists for the requested sensor. |

| TriggerSelector | triggerSelector | Selects the type of trigger to configure. A possible option is mvTimestampReset, for which a separate use case is available. |

| TriggerOverlap[TriggerSelector] | triggerOverlap | Specifies the type of trigger overlap permitted with the previous frame. TriggerOverlap is only intended for external triggers (which are usually non-overlapped, i.e. exposure and readout are sequential). Non-overlapped operation leads to minimal latency / jitter between trigger and exposure. With overlap, the maximum frame rate in triggered mode equals the frame rate of continuous mode; this, however, leads to higher latency / jitter between trigger and exposure. A trigger will not be latched if it occurs before this moment (trigger is accurate in time). |

| ExposureMode | exposureMode | Sets the operation mode of the exposure (or shutter). |

| ExposureTime | exposureTime | Sets the exposure time (in microseconds) when ExposureMode is Timed and ExposureAuto is Off. |

| ExposureAuto | exposureAuto | Sets the automatic exposure mode when ExposureMode is Timed. |

| etc. |

related to the image acquisition, including the triggering mode.

Additionally, Balluff offers numerous further features like:

- mvShutterMode, which selects the shutter mode of the CMOS sensors, e.g. rolling shutter or global shutter.

- mvDefectivePixelEnable, which activates the sensor's defective pixel correction.

- mvExposureAutoSpeed, which determines the increment or decrement size of the exposure value from frame to frame.

- mvExposureAutoDelayImages, the number of frames that the AEC must skip before updating the exposure register.

- mvExposureAutoAverageGrey, the common desired average grey value (in percent) used for Auto Gain Control (AGC) and Auto Exposure Control (AEC).

- mvExposureAutoAOIMode, the common AutoControl AOI used for Auto Gain Control (AGC), Auto Exposure Control (AEC) and Auto White Balance (AWB).

- mvAcquisitionMemoryMode: Balluff offers three additional acquisition modes which use the internal memory of the camera:

  - mvRecord, which is used to store frames in memory.

  - mvPlayback, which transfers stored frames.

  - mvPretrigger, which stores frames in memory to be transferred after a trigger. To define the number of frames to acquire before the occurrence of an AcquisitionStart or AcquisitionActive trigger, you can use mvPretriggerFrameCount.

  - See also

  - The use case Recording sequences with pre-trigger shows how this feature works.

- mvAcquisitionMemoryMaxFrameCount, which shows the maximum number of frames the internal memory can store.

  - See also

  - The use case Working with burst mode buffer lists the maximum frame counts of some camera models.

- mvSmearReduction, smear reduction in triggered and non-overlapped mode.

- mvSmartFrameRecallEnable, which configures the internal memory to store each frame (that gets transmitted to the host) in full resolution.

  - See also

  - The use case SmartFrameRecall shows how this feature works.

  - Since

  - FW Revision 2.40.2546.0

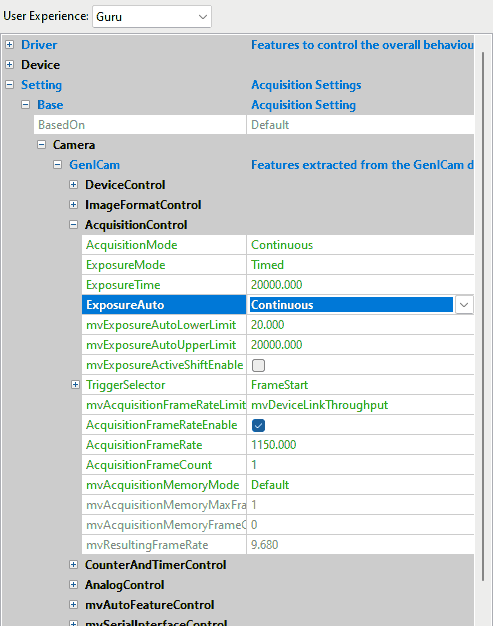

For the automatic exposure mode, select "Continuous" for "Exposure Auto" in ImpactControlCenter. Afterwards, you can set the lower and upper limits and the average grey value, combined with an AOI setting:

- mvSmartFrameRecallFrameSkipRatio: when set to a value != 0, the smaller frames get thinned out. AOI requests can still be done for all frames.

- mvSmartFrameRecallTimestampLookupAccuracy, which is needed for the SkipRatio feature since you don't know the timestamps of the internal frames. This value defines the strictness of the timestamp check for the recalled image (given in us).
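The mvPretrigger mode mentioned in this section can be pictured as a ring buffer that always holds the most recent frames until a trigger arrives. The sketch below only illustrates this idea on the host side; the class and method names are hypothetical and the camera's internal implementation may differ.

```python
from collections import deque


class PretriggerBuffer:
    """Illustrative ring buffer keeping the last N frames before a trigger."""

    def __init__(self, pretrigger_frame_count):
        # A deque with maxlen silently drops the oldest entry when full.
        self._frames = deque(maxlen=pretrigger_frame_count)

    def store(self, frame):
        """Called for every frame captured while waiting for the trigger."""
        self._frames.append(frame)

    def trigger(self):
        """Return the buffered pre-trigger frames (oldest first) and reset."""
        frames = list(self._frames)
        self._frames.clear()
        return frames
```

With a frame count of 3, ten stored frames followed by a trigger yield only the last three frames, which corresponds to the role of mvPretriggerFrameCount described above.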

Counter And Timer Control

The "Counter And Timer Control" is a powerful feature which Balluff/MATRIX VISION customers already know under the name Hardware Real-Time Controller (HRTC). Balluff/MATRIX VISION cameras provide:

- 4 counters for counting events or external signals (compare number of triggers vs. number of frames; overtrigger) and

- 2 timers.

Counters and timers can be used, for example,

- for pulse width modulation (PWM) and

- to generate output signals of variable length, depending on conditions in the camera.

This achieves complete HRTC functionality, which supports the following applications:

- frame rate by timer

- exposure time by timer

- pulse width at input

The "Counter And Timer Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| CounterSelector | counterSelector | Selects which counter to configure. |

| CounterEventSource[CounterSelector] | counterEventSource | Selects the events that will be the source to increment the counter. |

| CounterEventActivation[CounterSelector] | counterEventActivation | Selects the activation mode event source signal. |

| etc. |

| TimerSelector | timerSelector | Selects which timer to configure. |

| TimerDuration[TimerSelector] | timerDuration | Sets the duration (in microseconds) of the timer pulse. |

| TimerDelay[TimerSelector] | timerDelay | Sets the duration (in microseconds) of the delay. |

| etc. |

related to the usage of programmable counters and timers.
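To get a feeling for the timer values needed for a pulse width modulation, the resulting period and duty cycle can be computed up front. The sketch assumes a simplified model in which the signal is low during TimerDelay, high during TimerDuration, and the timer is restarted immediately when it ends; the function name is illustrative and the actual wiring of timers on a device may differ.

```python
def pwm_parameters(timer_delay_us, timer_duration_us):
    """Period, frequency and duty cycle for a self-restarting timer.

    Assumed model: output low for the delay, high for the duration.
    """
    period_us = timer_delay_us + timer_duration_us
    if period_us <= 0:
        raise ValueError("delay + duration must be positive")
    return {
        "period_us": period_us,
        "frequency_hz": 1_000_000 / period_us,
        "duty_cycle": timer_duration_us / period_us,
    }
```

For instance, a delay of 750 us and a duration of 250 us give a 1 kHz signal with a duty cycle of 25 %.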

Because there are many ways to use this feature, the list of use cases is long and not finished yet:

- Processing triggers from an incremental encoder

- Creating different exposure times for consecutive images

- Creating synchronized acquisitions

- Generating a pulse width modulation (PWM)

- Outputting a pulse at every other external trigger

- Generating very long exposure times

Analog Control

The "Analog Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| GainSelector | gainSelector | Selects which gain is controlled by the various gain features. |

| Gain[GainSelector] | gain | Controls the selected gain as an absolute physical value [in dB]. |

| GainAuto[GainSelector] | gainAuto | Sets the automatic gain control (AGC) mode. |

| GainAutoBalance | gainAutoBalance | Sets the mode for automatic gain balancing between the sensor color channels or taps. |

| BlackLevelSelector | blackLevelSelector | Selects which black level is controlled by the various BlackLevel features. |

| BlackLevel[BlackLevelSelector] | blackLevel | Controls the selected BlackLevel as an absolute physical value. |

| BalanceWhiteAuto | balanceWhiteAuto | Controls the mode for automatic white balancing between the color channels. |

| Gamma | gamma | Controls the gamma correction of pixel intensity. |

| etc. |

related to the video signal conditioning in the analog domain.

Additionally, Balluff offers:

- mvBalanceWhiteAuto functions and

- mvGainAuto functions.

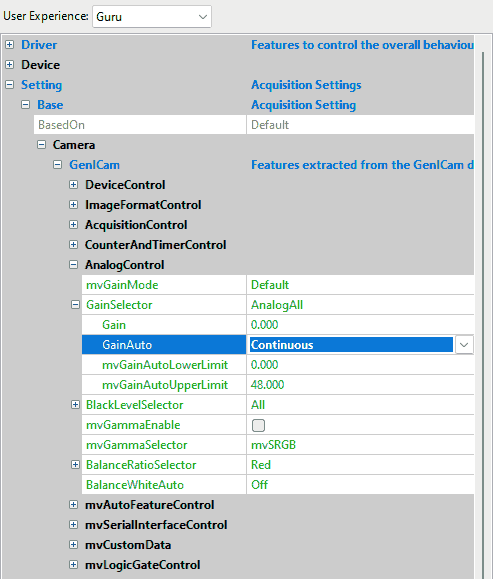

In ImpactControlCenter, select "Continuous" for "Gain Auto" (AGC). Afterwards, you can set the minimum and maximum limits, combined with an AOI setting:

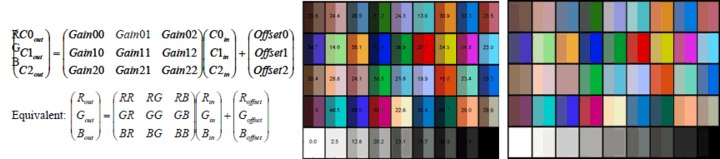

Color Transformation Control

The "Color Transformation Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| ColorTransformationEnable | colorTransformationEnable | Activates the selected color transformation module. |

| ColorTransformationSelector | colorTransformationSelector | Selects which color transformation module is controlled by the various color transformation features. |

| ColorTransformationValue | colorTransformationValue | Represents the value of the selected Gain factor or Offset inside the transformation matrix. |

| ColorTransformationValueSelector | colorTransformationValueSelector | Selects the gain factor or Offset of the Transformation matrix to access in the selected color transformation module. |

related to the control of the color transformation.

This control offers enhanced color processing for optimum color fidelity using a color correction matrix (CCM) and enables

- 9 coefficient values (Gain 00 .. Gain 22) and

- 3 offset values (Offset 0 .. Offset 2)

to be entered for the RGB_IN → RGB_OUT transformation. This can be used to optimize specific colors or specific color temperatures.
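The transformation itself is a 3x3 matrix multiplication followed by an offset. The following sketch shows the arithmetic for a single pixel; it assumes that "Gain rc" denotes the matrix element in row r (output channel) and column c (input channel), and it does not model the clipping to the valid pixel range that a real device performs. The function name is illustrative.

```python
def apply_color_transformation(rgb_in, gains, offsets):
    """Compute RGB_OUT = gains * RGB_IN + offsets for one pixel.

    gains   : 3x3 nested sequence, gains[row][col] ~ "Gain <row><col>" (assumed)
    offsets : sequence of 3 values, offsets[row] ~ "Offset <row>"
    """
    return tuple(
        sum(gains[row][col] * rgb_in[col] for col in range(3)) + offsets[row]
        for row in range(3)
    )


# Identity matrix and zero offsets leave the pixel unchanged.
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
assert apply_color_transformation((100, 40, 20), IDENTITY, (0, 0, 0)) == (100, 40, 20)
```

Negative off-diagonal gains, as commonly found in color correction matrices, subtract a portion of the other channels and thereby increase color saturation.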

Coefficients will be made available for sensor models and special requirements on demand.

Event Control

The "Event Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| EventSelector | eventSelector | Selects which Event to signal to the host application. |

| EventNotification[EventSelector] | eventNotification | Activate or deactivate the notification to the host application of the occurrence of the selected Event. |

| EventFrameTriggerData | eventFrameTriggerData | Category that contains all the data features related to the FrameTrigger event. |

| EventFrameTrigger | eventFrameTrigger | Returns the unique identifier of the FrameTrigger type of event. |

| EventFrameTriggerTimestamp | eventFrameTriggerTimestamp | Returns the timestamp of the FrameTrigger event. |

| EventFrameTriggerFrameID | eventFrameTriggerFrameID | Returns the unique identifier of the frame (or image) that generated the FrameTrigger event. |

| EventExposureEndData | eventExposureEndData | Category that contains all the data features related to the ExposureEnd event. |

| EventExposureEnd | eventExposureEnd | Returns the unique identifier of the ExposureEnd type of event. |

| EventExposureEndTimestamp | eventExposureEndTimestamp | Returns the timestamp of the ExposureEnd Event. |

| EventExposureEndFrameID | eventExposureEndFrameID | Returns the unique identifier of the frame (or image) that generated the ExposureEnd event. |

| EventErrorData | eventErrorData | Category that contains all the data features related to the error event. |

| EventError | eventError | Returns the unique identifier of the error type of event. |

| EventErrorTimestamp | eventErrorTimestamp | Returns the timestamp of the error event. |

| EventErrorFrameID | eventErrorFrameID | If applicable, returns the unique identifier of the frame (or image) that generated the error event. |

| EventErrorCode | eventErrorCode | Returns an error code for the error(s) that happened. |

| etc. |

related to the generation of Event notifications by the device.

The use case Working with Event Control shows how this control can be used.

Chunk Data Control

The "Chunk Data Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| ChunkModeActive | chunkModeActive | Activates the inclusion of chunk data in the payload of the image. |

| ChunkSelector | chunkSelector | Selects which chunk to enable or control. |

| ChunkEnable[ChunkSelector] | chunkEnable | Enables the inclusion of the selected chunk data in the payload of the image. |

| ChunkImage | chunkImage | Returns the entire image data included in the payload. |

| ChunkOffsetX | chunkOffsetX | Returns the offset x of the image included in the payload. |

| ChunkOffsetY | chunkOffsetY | Returns the offset y of the image included in the payload. |

| ChunkWidth | chunkWidth | Returns the width of the image included in the payload. |

| ChunkHeight | chunkHeight | Returns the height of the image included in the payload. |

| ChunkPixelFormat | chunkPixelFormat | Returns the pixel format of the image included in the payload. |

| ChunkTimestamp | chunkTimestamp | Returns the timestamp of the image included in the payload at the time of the FrameStart internal event. |

| etc. |

related to the Chunk Data Control.

A description can be found in the image acquisition section of the Impact Acquire API manuals.

File Access Control

The "File Access Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| FileSelector | fileSelector | Selects the target file in the device. |

| FileOperationSelector[FileSelector] | fileOperationSelector | Selects the target operation for the selected file in the device. |

| FileOperationExecute[FileSelector][FileOperationSelector] | fileOperationExecute | Executes the operation selected by FileOperationSelector on the selected file. |

| FileOpenMode[FileSelector] | fileOpenMode | Selects the access mode in which a file is opened in the device. |

| FileAccessBuffer | fileAccessBuffer | Defines the intermediate access buffer that allows the exchange of data between the device file storage and the application. |

| etc. |

related to the File Access Control that provides all the services necessary for generic file access of a device.

The use case Working with the UserFile section (Flash memory) shows how this control can be used.

Digital I/O Control

The "Digital I/O Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| LineSelector | lineSelector | Selects the physical line (or pin) of the external device connector to configure. |

| LineMode[LineSelector] | lineMode | Controls if the physical Line is used to Input or Output a signal. |

| UserOutputSelector | userOutputSelector | Selects which bit of the user output register will be set by UserOutputValue. |

| UserOutputValue[UserOutputSelector] | userOutputValue | Sets the value of the bit selected by UserOutputSelector. |

| etc. |

related to the control of the general input and output pins of the device.
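The relationship between UserOutputSelector and UserOutputValue can be pictured as setting or clearing a single bit in a register. The sketch below models this with a plain integer; it is purely illustrative and does not access any device.

```python
def set_user_output(register, selector, value):
    """Return the register with the bit chosen by `selector` set to `value`."""
    mask = 1 << selector
    if value:
        return register | mask   # set the selected bit
    return register & ~mask      # clear the selected bit
```

All other bits of the register stay untouched, which is why individual outputs can be switched independently.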

Additionally, Balluff offers:

- mvLineDebounceTimeRisingEdge and

- mvLineDebounceTimeFallingEdge functionality.

A description of these functions can be found in the use case Creating a debouncing filter at the inputs.
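The principle of a debouncing filter with separate times for rising and falling edges can be sketched as follows. This is a simplified model operating on timestamped samples, written under the assumption that a level change is accepted only after the new level has been stable for the configured time; it is not the device implementation, and the function name is hypothetical.

```python
def debounce(samples, rising_us, falling_us):
    """Filter a sampled input signal.

    samples : list of (timestamp_us, level) tuples sorted by time, level 0 or 1
    Returns the accepted level changes as (timestamp_us, level) tuples.
    """
    if not samples:
        return []
    output_level = samples[0][1]
    candidate_since = None  # time at which the pending new level first appeared
    accepted = []
    for timestamp, level in samples:
        if level == output_level:
            candidate_since = None  # the glitch ended before the time elapsed
            continue
        if candidate_since is None:
            candidate_since = timestamp
        required = rising_us if level else falling_us
        if timestamp - candidate_since >= required:
            output_level = level
            candidate_since = None
            accepted.append((timestamp, level))
    return accepted
```

Short pulses that return to the previous level before the debounce time has elapsed are suppressed, while stable level changes are reported with a delay of at least the debounce time.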

How you can test the digital inputs and outputs is described in "Testing The Digital Inputs" in the "Impact Acquire SDK GUI Applications" manual. The use case Creating synchronized acquisitions is a further example which shows you how to work with digital inputs and outputs.

Encoder Control

The "Encoder Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| EncoderSourceA | encoderSourceA | Selection of the A input line. |

| EncoderSourceB | encoderSourceB | Selection of the B input line. |

| EncoderMode [FourPhase] | encoderMode | The counter increments or decrements by 1 for every full quadrature cycle. |

| EncoderDivider | encoderDivider | Sets how many encoder increments/decrements are needed to generate an encoder output signal. |

| EncoderOutputMode | encoderOutputMode | Output signals are generated at all new positions in one direction. If the encoder reverses, no output pulses are generated until it has again passed the position where the reversal started. |

| EncoderValue | encoderValue | Reads or writes the current value of the position counter of the selected Encoder. Writing to EncoderValue is typically used to set the start value of the position counter. |

related to the usage of quadrature encoders.

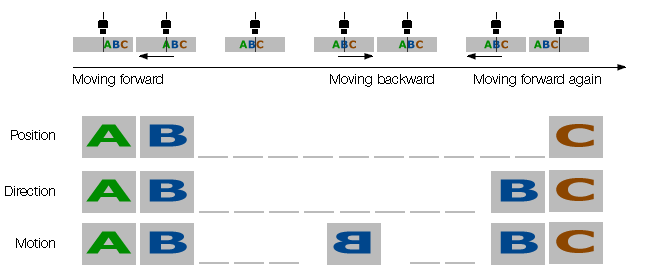

The following figure explains the different EncoderOutputModes :

Additionally, the Encoder is also available as TriggerSource and as an EventSource.

A description of the incremental encoder's principle can be found in the use case Processing triggers from an incremental encoder.
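How a position counter can be derived from the A and B signals of a quadrature encoder is sketched below. The model counts one step per full quadrature cycle, as described for the FourPhase mode, and applies a divider; the class, the assumed initial state (both lines low) and the handling of invalid transitions are illustrative assumptions.

```python
# (A, B) line states in the order they occur during forward motion (Gray code).
FORWARD_ORDER = [(0, 0), (1, 0), (1, 1), (0, 1)]


class QuadratureCounter:
    """Illustrative quadrature decoder with one count per full cycle."""

    def __init__(self, divider=1):
        self.divider = divider
        self.state = (0, 0)
        self.transitions = 0  # signed count of single A/B transitions

    def update(self, a, b):
        """Feed the current levels of the A and B lines."""
        step = (FORWARD_ORDER.index((a, b)) - FORWARD_ORDER.index(self.state)) % 4
        if step == 1:
            self.transitions += 1
        elif step == 3:
            self.transitions -= 1
        # step == 0: nothing changed; step == 2: invalid double change, ignored
        self.state = (a, b)

    @property
    def value(self):
        # One count per full quadrature cycle (four transitions).
        return int(self.transitions / 4)

    @property
    def divided_value(self):
        # Number of complete groups of `divider` counts.
        return int(self.value / self.divider)
```

The direction is derived from the order in which the two lines change, which is why a single-line encoder cannot distinguish forward from backward motion.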

Sequencer Control

Sequencer overview

The purpose of a sequencer is to allow the user of a camera to define a series of feature sets for image acquisition which can consecutively be activated during the acquisition by the camera. Accordingly, the proposed sequence is configured by a list of parameter sets. Each of these sequencer sets contains the settings for a number of camera features. Similar to user sets, the actual settings of the camera are overwritten when one of these sequencer sets is loaded. The order in which the features are applied to the camera depends on the design of the vendor. It is recommended to apply all the image related settings to the camera, before the first frame of this sequence is captured. The sequencer sets can be loaded and saved by selecting them using SequencerSetSelector. The Execution of the sequencer is completely controlled by the device.

(quoted from the GenICam SFNC 2.3)

Configuration of a sequencer set

The index of the adjustable sequencer set is given by the SequencerSetSelector. The number of available sequencer sets is directly given by the range of this feature. The features which are actually part of a sequencer set are defined by the camera manufacturer. These features can be read by SequencerFeatureSelector and activated by SequencerFeatureEnable[SequencerFeatureSelector]. This configuration is the same for all Sequencer Sets. To configure a sequencer set the camera has to be switched into configuration mode by SequencerConfigurationMode. Then the user has to select the desired sequencer set he wants to modify with the SequencerSetSelector. After the user has changed all the needed camera settings it is possible to store all these settings within a selected sequencer set by executing SequencerSetSave[SequencerSetSelector]. The user can also read back these settings by executing SequencerSetLoad[SequencerSetSelector]. To permit a flexible usage, more than one possibility to go from one sequencer set to another can exist. Such a path is selected by SequencerPathSelector[SequencerSetSelector]. Each path and therefore the transition between different sequencer sets is based on a defined trigger and an aimed next sequencer set which is selectable by SequencerSetNext[SequencerSetSelector][SequencerPathSelector]. After the trigger occurs the settings of the next set are active. The trigger is defined by the features SequencerTriggerSource[SequencerSetSelector][SequencerPathSelector] and SequencerTriggerActivation[SequencerSetSelector][SequencerPathSelector]. The functions of these features are the same as TriggerSource and TriggerActivation. For a flexible sequencer implementation, the SequencerPathSelector[SequencerSetSelector] should be part of the sequencer sets.

(quoted from the GenICam SFNC 2.3)
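The interplay of sequencer sets, paths and triggers described above resembles a small state machine. The following sketch models it on the host side for illustration only; the class names and the trigger names used as dictionary keys are hypothetical, and the real sequencer runs entirely inside the device.

```python
from dataclasses import dataclass, field


@dataclass
class SequencerSet:
    settings: dict                              # e.g. {"ExposureTime": 1000}
    paths: dict = field(default_factory=dict)   # trigger source -> next set index


class Sequencer:
    """Illustrative model: each set names its successor per trigger source."""

    def __init__(self, sets, start_set=0):
        self.sets = sets
        self.active = start_set

    @property
    def settings(self):
        """Settings of the currently active sequencer set."""
        return self.sets[self.active].settings

    def on_trigger(self, source):
        """Switch to the next set if the active set has a path for `source`."""
        next_set = self.sets[self.active].paths.get(source)
        if next_set is not None:
            self.active = next_set
        return self.active
```

In this model, `start_set` plays the role of SequencerSetStart, the dictionary keys correspond to SequencerTriggerSource and the dictionary values to SequencerSetNext for the respective path.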

The "Sequencer Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| SequencerMode | sequencerMode | Controls if the sequencer mechanism is active. Possible values are: Off, On. |

| SequencerConfigurationMode | sequencerConfigurationMode | Controls if the sequencer configuration mode is active. Possible values are: Off, On. |

| SequencerFeatureSelector | sequencerFeatureSelector | Selects which sequencer features to control. The feature lists all the features that can be part of a device sequencer set. All the device's sequencer sets have the same features. Note that the name used in the enumeration must match exactly the device's feature name. |

| SequencerFeatureEnable[SequencerFeatureSelector] | sequencerFeatureEnable | Enables the selected feature and makes it active in all the sequencer sets. |

| SequencerSetSelector | sequencerSetSelector | Selects the sequencer set to which further settings apply. |

| SequencerSetSave | sequencerSetSave | Saves the current device state to the sequencer set selected by SequencerSetSelector. |

| SequencerSetLoad | sequencerSetLoad | Loads the sequencer set selected by SequencerSetSelector in the device. Even if SequencerMode is Off, this will change the device state to the configuration of the selected set. |

| SequencerSetActive | sequencerSetActive | Contains the currently active sequencer set. |

| SequencerSetStart | sequencerSetStart | Sets the initial/start sequencer set, which is the first set used within a sequencer. |

| SequencerPathSelector[SequencerSetSelector] | sequencerPathSelector | Selects to which branching path further path settings apply. |

| SequencerSetNext | sequencerSetNext | Selects the next sequencer set. |

| SequencerTriggerSource | sequencerTriggerSource | Specifies the internal signal or physical input line to use as the sequencer trigger source. Balluff/MATRIX VISION devices support a subset of the possible values; third-party devices might support others. |

| SequencerTriggerActivation | sequencerTriggerActivation | Specifies the activation mode of the sequencer trigger. The supported values depend on the selected trigger source (e.g. UserOutput0). |

The sequencer mode can be used to define a series of feature sets for image acquisition. The camera can activate these sets consecutively during the acquisition. The sequence is configured by a list of parameter sets.

- Note

- At the moment, the Sequencer Mode is only available for Balluff/MATRIX VISION cameras with CCD sensors and Sony's CMOS sensors. Please consult the "Device Feature and Property List" of your device for a summary of the features actually supported by each sensor.

The following features can currently be used inside the sequencer control:

| Feature | Note | Changeable during runtime |

| BinningHorizontal | - | |

| BinningVertical | - | |

| CounterDuration | Can be used to configure a certain set of sequencer parameters to be applied for the next CounterDuration frames. | since Firmware version 2.15 |

| DecimationHorizontal | - | |

| DecimationVertical | - | |

| ExposureTime | since Firmware version 2.15 | |

| Gain | since Firmware version 2.15 | |

| Height | since Firmware version 2.36 | |

| OffsetX | since Firmware version 2.35 | |

| OffsetY | since Firmware version 2.35 | |

| Width | since Firmware version 2.36 | |

| mvUserOutput | - | |

| UserOutputValueAll | - | |

| UserOutputValueAllMask | - | |

| Multiple conditional sequencer paths | - | |

- Note

- Configured sequencer programs are stored as part of the User Sets like any other feature.

Actual settings of the camera are overwritten when a sequencer set is loaded.

- See also

- Define multiple exposure times using the Sequencer Control

- There are 3 C++ examples called GenICamSequencerUsage, GenICamSequencerUsageWithPaths and GenICamSequencerParameterChangeAtRuntime that show how to control the sequencer from an application. They can be found in the "Examples" section of the Impact Acquire C++ API.

Transport Layer Control

The "Transport Layer Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| PayloadSize | payloadSize | Provides the number of bytes transferred for each image or chunk on the stream channel. |

| GevInterfaceSelector | gevInterfaceSelector | Selects which physical network interface to control. |

| GevMACAddress[GevInterfaceSelector] | gevMACAddress | MAC address of the network interface. |

| GevStreamChannelSelector | gevStreamChannelSelector | Selects the stream channel to control. |

| PtpEnable | ptpEnable | Enables the Precision Time Protocol (PTP). |

| etc. |

related to the Transport Layer Control.

Use cases related to the "Transport Layer Control" are:

User Set Control

The "User Set Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| UserSetSelector | userSetSelector | Selects the feature user set to load, save or configure. |

| UserSetLoad[UserSetSelector] | userSetLoad | Loads the user set specified by UserSetSelector to the device and makes it active. |

| UserSetSave[UserSetSelector] | userSetSave | Saves the user set specified by UserSetSelector to the non-volatile memory of the device. |

| UserSetDefault | userSetDefault | Selects the feature user set to load and make active when the device is reset. |

related to the User Set Control to save and load the user device settings.

The camera can store up to four configuration sets internally. This is similar to storing settings in the registry, except that the settings reside in the camera itself. It is possible to store

- exposure,

- gain,

- AOI,

- frame rate,

- LUT,

- one Flat-Field Correction

- etc.

permanently. You can select which user set becomes active after a hard reset.

Use case related to the "User Set Control" is:

Another way to create user data is described here: Creating user data entries

Action Control

The "Action Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| ActionDeviceKey | actionDeviceKey | Provides the device key that allows the device to check the validity of action commands. The device internal assertion of an action signal is only authorized if the ActionDeviceKey and the action device key value in the protocol message are equal. |

| ActionSelector | actionSelector | Selects to which action signal further action settings apply. |

| ActionGroupKey | actionGroupKey | Provides the key that the device will use to validate the action on reception of the action protocol message. |

| ActionGroupMask | actionGroupMask | Provides the mask that the device will use to validate the action on reception of the action protocol message. |

related to the Action control features.

The use case Using Action Commands shows in detail how this feature works.
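The validation a device performs when it receives an action command can be summarized in a few lines. The sketch follows the rule commonly described for GigE Vision action commands (device key equal, group key equal, bitwise AND of the group masks non-zero); the class and function names are illustrative, and the authoritative definition is the GigE Vision specification.

```python
from dataclasses import dataclass


@dataclass
class ActionSignal:
    """Configuration of one action signal (chosen via ActionSelector)."""
    group_key: int   # ActionGroupKey
    group_mask: int  # ActionGroupMask


def action_is_accepted(device_key, signal, msg_device_key, msg_group_key, msg_group_mask):
    """Return True if the received action message asserts the action signal."""
    return (
        msg_device_key == device_key                   # ActionDeviceKey must match
        and msg_group_key == signal.group_key          # group keys must match
        and (msg_group_mask & signal.group_mask) != 0  # masks must share a bit
    )
```

The group mask allows one broadcast message to address an arbitrary subset of the devices that share the same group key.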

mv Logic Gate Control

The "mv Logic Gate Control" contains features like

| Feature name | Description |

| mvLogicGateANDSelector | Selects the AND gate to configure. |

| mvLogicGateANDSource1 | Selects the first input signal of the AND gate selected by mvLogicGateANDSelector. |

| mvLogicGateANDSource2 | Selects the second input signal of the AND gate selected by mvLogicGateANDSelector. |

| mvLogicGateORSelector | Selects the OR gate to configure. |

| mvLogicGateORSource1 | Selects the first input signal of the OR gate selected by mvLogicGateORSelector. |

| mvLogicGateORSource2 | Selects the second input signal of the OR gate selected by mvLogicGateORSelector. |

| mvLogicGateORSource3 | Selects the third input signal of the OR gate selected by mvLogicGateORSelector. |

| mvLogicGateORSource4 | Selects the fourth input signal of the OR gate selected by mvLogicGateORSelector. |

These features control the device's logic gate parameters. A logic gate performs a logical operation on one or more logic inputs and produces a single logic output.

The use case Creating different exposure times for consecutive images shows how you can create different exposure times with timers, counters and the logic gate functionality.
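As a rough illustration of what the gates compute, the following Python sketch models a two-input AND gate and an OR gate with up to four inputs. The signal names (`Line4`, `Timer1Active`, and so on) and the wiring of AND outputs into the OR gate are assumptions made for the example; the sources actually selectable on a given device may differ.

```python
from typing import Dict, Sequence


def and_gate(signals: Dict[str, bool], source1: str, source2: str) -> bool:
    """Model of one AND gate with two selectable input signals."""
    return signals[source1] and signals[source2]


def or_gate(inputs: Sequence[bool]) -> bool:
    """Model of one OR gate with one to four inputs."""
    if not 1 <= len(inputs) <= 4:
        raise ValueError("an OR gate takes between one and four inputs")
    return any(inputs)


# Hypothetical snapshot of the input signal states.
signals = {
    "Line4": True,
    "Timer1Active": False,
    "Line5": True,
    "Timer2Active": True,
}

# ASSUMPTION: the outputs of two AND gates feed one OR gate.
and1 = and_gate(signals, "Line4", "Timer1Active")
and2 = and_gate(signals, "Line5", "Timer2Active")
gate_output = or_gate([and1, and2])
```

Here the first AND gate is low because `Timer1Active` is low, the second is high, and the OR gate therefore produces a high output.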

mv FFC Control

The "mv FFC Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| mvFFCEnable | | Enables the flat field correction. |

| mvFFCCalibrationImageCount | | The number of images to use for the calculation of the correction image. |

| mvFFCCalibrate | | Starts the calibration of the flat field correction. |

These features control the device's flat field correction parameters.

The use case Flat-Field Correction shows how this control can be used.
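To give an idea of why several calibration images are used, here is a small Python sketch of one common textbook formulation of flat field correction: average the calibration images into a correction image, derive a per-pixel gain from it, and multiply each raw pixel by that gain. This is an assumption for illustration only; the algorithm actually implemented in the device is not described here, and all function names are hypothetical.

```python
from typing import List, Sequence


def average_images(images: Sequence[Sequence[float]]) -> List[float]:
    """Average several calibration images (flattened pixel lists) pixel by pixel."""
    count = len(images)
    return [sum(pixels) / count for pixels in zip(*images)]


def gain_map(correction_image: Sequence[float]) -> List[float]:
    """Per-pixel gain that equalizes the correction image to its mean value."""
    mean = sum(correction_image) / len(correction_image)
    # Pixels with value 0 are left unchanged to avoid a division by zero.
    return [mean / value if value > 0 else 1.0 for value in correction_image]


def apply_ffc(image: Sequence[float], gains: Sequence[float],
              max_value: int = 1023) -> List[int]:
    """Apply the gains to a raw image and clip to the pixel range."""
    return [min(max_value, round(p * g)) for p, g in zip(image, gains)]


# Two identical 2-pixel calibration images: the second pixel is twice as bright.
calibration = [[100, 200], [100, 200]]
gains = gain_map(average_images(calibration))
corrected = apply_ffc([100, 200], gains)
```

After correction both pixels of the uniformly lit test image have the same value, which is the purpose of the correction.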

mv Serial Interface Control

The "mv Serial Interface Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| mvSerialInterfaceMode | | States the interface mode of the serial interface. |

| mvSerialInterfaceEnable | | Controls whether the serial interface is enabled or not. |

| mvSerialInterfaceBaudRate | | Serial interface clock frequency. |

| mvSerialInterfaceASCIIBuffer | | Buffer for exchanging ASCII data over serial interface. |

| mvSerialInterfaceWrite | | Command to write data from the serial interface. |

| mvSerialInterfaceRead | | Command to read data from the serial interface. |

| etc. | | |

These features control the device's serial interface parameters. The serial interface allows the camera to be controlled via a serial connection.

The use case Working With The Serial Interface (mv Serial Interface Control) shows how you can work with the serial interface control.

mv Defective Pixel Correction Control

The "mv Defective Pixel Correction Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| mvDefectivePixelCount | | Contains the number of valid defective pixels. |

| mvDefectivePixelSelector | | Controls the index of the defective pixel to access. |

| mvDefectivePixelDataLoad | | Loads the defective pixels from the device non-volatile memory. |

| mvDefectivePixelDataSave | | Saves the defective pixels to the device non-volatile memory. |

These features control the device's defective pixel data.

mv Frame Average Control (only with specific models)

The "mv Frame Average Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| mvFrameAverageEnable | | Enables the frame averaging engine. |

| mvFrameAverageSlope | | The slope in full range of register. |

These features relate to the frame averaging engine.

The use case Reducing noise by frame averaging shows in detail how this feature works.

mv Auto Feature Control

The "mv Auto Feature Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| mvAutoFeatureAOIMode | | Common AutoControl AOI used for Auto Gain Control (AGC), Auto Exposure Control (AEC) and Auto White Balancing. |

| mvAutoFeatureSensitivity | | The controller's sensitivity to brightness deviations. This parameter influences the gain as well as the exposure controller. |

| mvAutoFeatureCharacteristic | | Selects the prioritization between the Auto Exposure Control (AEC) and Auto Gain Control (AGC) controllers. |

| mvAutoFeatureBrightnessTolerance | | The error input hysteresis width of the controller. If the brightness error exceeds half of this value in the positive or negative direction, the controller resumes controlling the brightness. |

These features relate to the automatic control of exposure, gain, and white balance.

With this control you can adapt the characteristic of the controller to the lighting situation. AEC/AGC can be tuned with the additional properties mvAutoFeatureSensitivity, mvAutoFeatureCharacteristic and mvAutoFeatureBrightnessTolerance.
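The hysteresis described for mvAutoFeatureBrightnessTolerance can be expressed as a simple comparison. The sketch below is a simplified model with a hypothetical function name; it only shows the "half of the tolerance in either direction" rule and makes no claim about units or about how the controller behaves once it is active.

```python
def controller_should_resume(brightness_error: float,
                             brightness_tolerance: float) -> bool:
    """True if the error has left the hysteresis band of +/- tolerance / 2."""
    return abs(brightness_error) > brightness_tolerance / 2


# With a tolerance of 10, errors within -5..+5 leave the controller idle.
inside_band = controller_should_resume(4.0, 10.0)
above_band = controller_should_resume(6.0, 10.0)
below_band = controller_should_resume(-6.0, 10.0)
```

A wider tolerance therefore makes the controller react less often to small brightness changes.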

mv High Dynamic Range Control (only with specific sensor models)

The "mv High Dynamic Range Control" contains features like

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| mvHDREnable | | Enables the high dynamic range feature. |

| mvHDRPreset | | Selects the HDR parameter set. |

| mvHDRSelector | | Selects the HDR parameter set to configure. |

| mvHDRVoltage1 | | First HDR voltage in mV. |

| mvHDRVoltage2 | | Second HDR voltage in mV. |

| mvHDRExposure1 | | First HDR exposure in ppm. |

| mvHDRExposure2 | | Second HDR exposure in ppm. |

These features control the device's High Dynamic Range parameters.

The use cases Adjusting sensor of camera models with onsemi MT9V034 and Adjusting sensor of camera models with onsemi MT9M034 show the principle of the HDR.

LUT Control

The "LUT Control" contains features like

- Since

- FW Revision 2.35.2054.0

A 12 to 9 RawLUT for color cameras was added. The RawLUT works identically for all colors but with a higher resolution. This is useful if a higher dynamic range is needed.

| Feature name (acc. to SFNC) | Property name (acc. to Impact Acquire) | Description |

| LUTSelector | LUTSelector | Selects which LUT to control. |

| LUTEnable[LUTSelector] | LUTEnable | Activates the selected LUT. |

| LUTIndex[LUTSelector] | LUTIndex | Controls the index (offset) of the coefficient to access in the selected LUT. |

| LUTValue[LUTSelector][LUTIndex] | LUTValue | Returns the value at entry LUTIndex of the LUT selected by LUTSelector. |

| LUTValueAll[LUTSelector] | LUTValueAll | Allows access to all the LUT coefficients with a single read/write operation. |

| mvLUTType | | Describes which type of LUT is used for the current LUTSelector. |

| mvLUTInputData | | Describes the data the LUT is applied to (e.g. Bayer, RGB, or gray data). |

| mvLUTMapping | | Describes the LUT mapping (e.g. 10 bit → 12 bit). |

These features relate to the look-up table (LUT) control.

The look-up table (LUT) is a part of the signal path in the camera and maps data of the ADC into signal values. The LUT can be used e.g. for:

- High precision gamma

- Non linear enhancement (e.g. S-Shaped)

- Inversion (default)

- Negative offset

- Threshold

- Level windows

- Binarization

Applying the LUT in the camera saves approx. 5% CPU load, works on the fly in the FPGA of the camera, is less noisy, and leaves no missing codes after gamma stretching.
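The following Python sketch shows how the contents of a direct LUT for some of the uses listed above (gamma, inversion, binarization) could be computed on the host before they are written to the device. The helper `make_direct_lut`, the 10 → 10 bit mapping, the gamma value of 2.2 and the 50 % threshold are assumptions for the example; the sketch only builds the table and does not talk to a camera.

```python
from typing import Callable, List


def make_direct_lut(in_bits: int, out_bits: int,
                    transfer: Callable[[float], float]) -> List[int]:
    """Build a direct LUT from a transfer function on normalized values 0..1."""
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    lut = []
    for value in range(in_max + 1):
        y = transfer(value / in_max)
        y = min(max(y, 0.0), 1.0)  # clip to the valid output range
        lut.append(round(y * out_max))
    return lut


# Gamma correction with an assumed gamma of 2.2.
gamma_lut = make_direct_lut(10, 10, lambda x: x ** (1 / 2.2))
# Inversion: bright becomes dark and vice versa.
inverted_lut = make_direct_lut(10, 10, lambda x: 1.0 - x)
# Binarization with an assumed threshold at half of the input range.
binary_lut = make_direct_lut(10, 10, lambda x: 1.0 if x >= 0.5 else 0.0)
```

Each resulting list has one entry per possible input value, so it could be transferred entry by entry via LUTIndex and LUTValue, or in one operation via LUTValueAll.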

- See also

- Working with LUTValueAll

Implementing a hardware-based binarization

Optimizing the color/luminance fidelity of the camera

Three read-only registers describe the LUT that is selected using the LUTSelector register:

mvLUTType

There are two different types of LUTs available in Balluff/MATRIX VISION cameras:

- Direct LUTs define a mapping for each possible input value, for example a 12 → 10 bit direct LUT has 2^12 entries and each entry has 10 bits.

- Interpolated LUTs do not define a mapping for every possible input value, instead the user defines an output value for equidistant nodes. In between the nodes linear interpolation is used to calculate the correct output value.

Consider a 10 → 10 bit interpolated LUT with 256 nodes (as usually used in Balluff/MATRIX VISION cameras): the user defines a 10 bit output value for each of the 256 equidistant nodes, which are located at input values 0, 4, 8, 12, 16 and so on. For input values between the nodes, linear interpolation is used.
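The difference between the two LUT types can be sketched in Python as follows. The function names are hypothetical, and two details are assumptions because they are not specified here: how the result is rounded, and what happens for input values beyond the last node (1021 to 1023 in the 10 bit, 256 node case), where this sketch simply holds the last node value.

```python
from typing import Sequence


def direct_lut_output(lut: Sequence[int], value: int) -> int:
    """Direct LUT: one entry per possible input value."""
    return lut[value]


def interpolated_lut_output(nodes: Sequence[int], value: int,
                            in_bits: int = 10) -> int:
    """Interpolated LUT: equidistant nodes, linear interpolation in between."""
    if not 0 <= value < (1 << in_bits):
        raise ValueError("input value out of range")
    step = (1 << in_bits) // len(nodes)  # 1024 // 256 = 4
    index, offset = divmod(value, step)
    left = nodes[index]
    if index + 1 >= len(nodes):
        # ASSUMPTION: beyond the last node the last node value is held.
        return left
    right = nodes[index + 1]
    # ASSUMPTION: the interpolated value is rounded to the nearest integer.
    return left + round((right - left) * offset / step)


# 256 nodes describing an identity-like mapping: node i sits at input 4 * i.
nodes = [4 * i for i in range(256)]
on_node = interpolated_lut_output(nodes, 8)
between_nodes = interpolated_lut_output(nodes, 6)
past_last_node = interpolated_lut_output(nodes, 1023)
```

The interpolated variant needs only 256 entries instead of 1024, at the cost of a piecewise linear approximation between the nodes.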

mvLUTInputData

This register describes which data the LUT is applied to:

- Bayer means that the LUT is applied to raw Bayer data, thus (depending on the de-Bayer algorithm) a manipulation of one pixel may also affect other pixels in its neighborhood.

- Gray means that the LUT is applied to gray data.

- RGB means that the LUT is applied to RGB data (i.e. after de-Bayering). Normally this is used to change the luminance on an RGB image and the LUT is applied to all three channels.

mvLUTMapping

This register describes the mapping of the currently selected LUT, e.g. "map_10To10" means that a 10 bit input value is mapped to a 10 bit output value, whereas "map_12To10" means that a 12 bit input value is mapped to a 10 bit output value.
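When sizing a LUT on the host it can be handy to derive the bit depths from the mapping name. The sketch below parses strings of the form shown above; the helper names are hypothetical, and the entry count applies to direct LUTs only, since interpolated LUTs use a fixed number of nodes instead.

```python
import re
from typing import Tuple

_MAPPING_PATTERN = re.compile(r"^map_(\d+)To(\d+)$")


def parse_lut_mapping(name: str) -> Tuple[int, int]:
    """Return (input bits, output bits) for a name such as 'map_12To10'."""
    match = _MAPPING_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected mapping name: {name!r}")
    return int(match.group(1)), int(match.group(2))


def direct_lut_entry_count(name: str) -> int:
    """Number of entries of a direct LUT with the given mapping."""
    in_bits, _ = parse_lut_mapping(name)
    return 1 << in_bits


in_bits, out_bits = parse_lut_mapping("map_12To10")
entries = direct_lut_entry_count("map_12To10")
max_output = (1 << out_bits) - 1
```

For "map_12To10" this yields 4096 entries with output values in the range 0 to 1023.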

LUT support in Balluff/MATRIX VISION cameras

| mvBlueCOUGAR | LUTSelector | LUT type | LUT mapping | LUT input data |

| -X100wG -X100wG-POE -X102bG -X102bG-POE -X102bG-POEI -X102dG -X102dG-POE -X102dG-POEI -X102eG -X102eGE -X104eG -X104eG-POE -X104eG-PLC -X102eG-POE -X102eGE-POE -X102eG-POEI -X102eGE-POEI -X105G -X120aG -X120aG-POE -X120bG -X120bG-POE -X120bG-PLC -X120dG -X120dG-POE -X120dG-POEI -X122G -X122G-POE -X122G-PLC -X122G-POEI -X123G -X123G-PLC -X123G-POE -X123G-POEI -X124G -X124G-POE -X1010G -X1010G-POE -X225G-POEI -X225G -X225G-PLC -X225G-1211-ET | Luminance | Direct | map_10To10 | Gray |

| -X100wC -X100wC-POE -X102bC -X102bC-POE -X102bC-POEI -X102dC -X102dC-POE -X102dC-POEI -X102eC -X104eC -X104eC-POE -X104eC-PLC -X102eC-POE -X102eC-POEI -X105C -X120aC -X120aC-POE -X120bC -X120bC-POE -X120bC-PLC -X120dC -X120dC-POE -X120dC-POEI -X122C -X122C-POE -X122C-PLC -X123C -X123C-PLC -X123C-POE -X123C-POEI -X124C -X124C-POE -X1010C -X1010C-POE -X225C-POEI -X225C | Luminance Red Green Blue | Interpolated Direct Direct Direct | map_10To10 map_8To10 map_8To10 map_8To10 | RGB Bayer Bayer Bayer |

| -X104G -X104G-POEI -X104aG -X104a12G -X104bG -X104bG-POE -X125aG -X125aG-POE -X125aG-POEI | Luminance | Direct | map_12To10 | Gray |

| -X104C -X104C-POE -X104aC -X104a12C -X104bC -X104bC-POE -X125aC -X125aC-POE -X125aC-POEI | Red Green Blue | Direct Direct Direct | map_10To10 map_10To10 map_10To10 | Bayer Bayer Bayer |

| -X100fG -X100fG-POE -X100fG-POEI -X100sG -X100sG-POE -X100sG-POEI -X101sG -X101sG-POE -X101sG-POEI -X102fG -X102fG-POE -X102fG-POEI -X102kG -X102kG-POE -X102kG-POEI -X102mG -X102mG-POE -X102mG-POEI -X102nG -X102nG-POE -X102nG-POEI -X104fG -X104fG-POE -X104fG-POEI -X104iG -X104iG-POE -X104iG-POEI -X105bG -X105bG-POE -X105bG-POEI -X105dG -X105dG-POE -X105dG-POEI -X105pG -X105pG-POE -X105pG-POEI -X105sG -X105sG-POE -X105sG-POEI -X106G -X106G-POE -X106G-POEI -X107bG -X107bG-POE -X107bG-POEI -X108aG -X108aG-POE -X108aG-POEI -X108uG -X108uG-POE -X108uG-POEI -X109bG -X109bG-POE -X109bG-POEI -X1012bG -X1012bG-POE -X1012bG-POEI -X1012dG -X1012dG-POE -X1012dG-POEI -X1012rG -X1012rG-POE -X1012rG-POEI -X1020G -X1020G-POE -X1020G-POEI | Luminance | Direct | map_12To9 | Gray |

| -X100fC -X100fC-POE -X100fC-POEI -X102fC -X102fC-POE -X102fC-POEI -X102kC -X102kC-POE -X102kC-POEI -X102mC -X102mC-POE -X102mC-POEI -X102nC -X102nC-POE -X102nC-POEI -X104fC -X104fC-POE -X104fC-POEI -X104iC -X104iC-POE -X104iC-POEI -X105bC -X105bC-POE -X105bC-POEI -X105dC -X105dC-POE -X105dC-POEI -X105pC -X105pC-POE -X105pC-POEI -X106C -X106C-POE -X106C-POEI -X107bC -X107bC-POE -X107bC-POEI -X108aC -X108aC-POE -X108aC-POEI -X109bC -X109bC-POE -X109bC-POEI -X1012bC -X1012bC-POE -X1012bC-POEI -X1012dC -X1012dC-POE -X1012dC-POEI -X1012rC -X1012rC-POE -X1012rC-POEI -X1020C -X1020C-POE -X1020C-POEI | Red Green Blue mvRaw | Direct Direct Direct Direct | map_10To9 map_10To9 map_10To9 map_12To9 | Bayer Bayer Bayer Bayer |

| -X2012bG -X2012bG-POE -X2012bG-POEI | Luminance | Direct | map_12To9 | Gray |

| -X2012bC -X2012bC-POE -X2012bC-POEI | Red Green Blue mvRaw | Direct Direct Direct Direct | map_10To9 map_10To9 map_10To9 map_12To9 | Bayer Bayer Bayer Bayer |

| -XD124aG -XD126G -XD129G -XD124bG -XD126aG -XD129aG -XD204G -XD204aG -XD204bG -XD204baG -XD104G -XD104aG -XD104a12G -XD104bG -XD104baG -XD1212aG | Luminance | Direct | map_10To12 | Gray |

| -XD124aC -XD126C -XD129C -XD124bC -XD126aC -XD129aC -XD204C -XD204aC -XD204bC -XD204baC -XD104C -XD104aC -XD104bC -XD104baC -XD1212aC | Luminance Red Green Blue | Interpolated Direct Direct Direct | map_10To10 map_8To12 map_8To12 map_8To12 | RGB Bayer Bayer Bayer |

| -XD102mG -XD104dG -XD105aG -XD105dG -XD104hG -XD107G -XD107bG -XD108aG -XD109bG -XD1012bG -XD1012dG -XD1016G -XD1020aG -XD1025G -XD1031G | Luminance | Direct | map_12To9 | Gray |

| -XD104dC -XD105aC -XD104hC -XD105dC -XD107C -XD107bC -XD108aC -XD109bC -XD1012bC -XD1012dC -XD1016C -XD1020aC -XD1025C -XD1031C | Luminance Red Green Blue mvRaw | Interpolated Direct Direct Direct Direct | map_10To10 map_10To9 map_10To9 map_10To9 map_12To9 | RGB Bayer Bayer Bayer Bayer |

| mvBlueCOUGAR-3X120aG mvBlueCOUGAR-3X120aG-POEI | - | - | - | - |

| mvBlueCOUGAR-3X123G | Red(1st head) Green(2nd head) Blue(3rd head) | Interpolated Interpolated Interpolated | map_12To12 map_12To12 map_12To12 | Gray Gray Gray |

| mvBlueCOUGAR-3X123C | Red(1st head) Green(2nd head) Blue(3rd head) | Interpolated Interpolated Interpolated | map_12To12 map_12To12 map_12To12 | Bayer Bayer Bayer |