General

| Component | Specification |
|---|---|
| CPU | Arm Cortex-A78AE v8.2 @ 2.2 GHz |
| Cores | 12 |
| RAM | 32 GB |
| USB 2.0 Interfaces | 4 |
| USB 3.2 Interfaces | 3 |
| Ethernet | 1x 1 GbE + 1x 10 GbE |
| PCIe | 2x8 + 1x4 + 2x1 Gen 4.0 |

- Note

- The above table describes the specification of the NVIDIA Jetson™ AGX Orin Developer Kit.

- The following tests were conducted on JetPack 5.0.2 with power mode MAXN.
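Because the results below assume power mode MAXN, it can help to check the active mode before reproducing them. A minimal sketch, assuming `nvpmodel -q` prints a line of the form `NV Power Mode: MAXN` as on recent JetPack releases; the helper name `is_maxn` is hypothetical.

```shell
# is_maxn: hypothetical helper. Reads `nvpmodel -q` output on stdin and
# succeeds (exit status 0) only if the active power mode is MAXN.
# Assumption: the mode name is printed on a line "NV Power Mode: <name>".
is_maxn() {
  grep -Eq '^NV Power Mode: MAXN[[:space:]]*$'
}

# Usage on the Jetson:
#   sudo nvpmodel -q | is_maxn && echo "MAXN active" || echo "not in MAXN"
```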

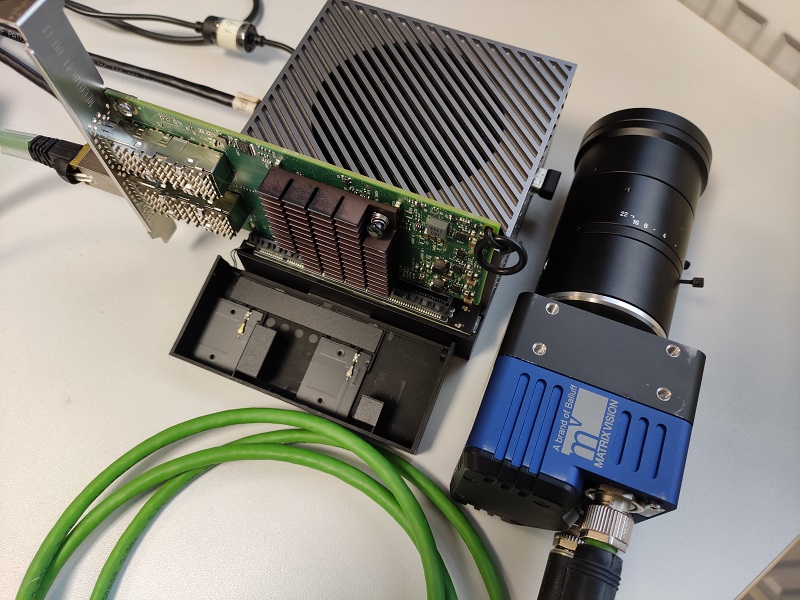

Test Setup

- Note

- The Mellanox ConnectX-4 network card is used in this test.

Additional Settings

Set CPU and EMC clocks to maximum

To ensure reliable data transmission, set the CPU and EMC (external memory controller) clocks to maximum. To do so, run jetson_clocks with root privileges from the command line:

$ sudo jetson_clocks

- Note

- The above command only takes effect until the next reboot; the clocks are reset at the next system boot. To apply it permanently, run the command from a systemd service at every boot.
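The note above mentions a systemd service. A minimal sketch of such a oneshot unit follows; the unit name `jetson-clocks.service`, the helper `jetson_clocks_unit`, and the path `/usr/bin/jetson_clocks` are assumptions (verify the path with `command -v jetson_clocks`).

```shell
# jetson_clocks_unit: hypothetical helper that prints a oneshot systemd unit
# which runs jetson_clocks once at boot.
# Assumption: jetson_clocks is installed as /usr/bin/jetson_clocks.
jetson_clocks_unit() {
  cat <<'EOF'
[Unit]
Description=Set CPU and EMC clocks to maximum (jetson_clocks)

[Service]
Type=oneshot
ExecStart=/usr/bin/jetson_clocks

[Install]
WantedBy=multi-user.target
EOF
}

# Install and enable (needs root):
#   jetson_clocks_unit | sudo tee /etc/systemd/system/jetson-clocks.service >/dev/null
#   sudo systemctl daemon-reload
#   sudo systemctl enable jetson-clocks.service
```

A oneshot unit fits here because jetson_clocks only sets the clocks and exits; nothing has to keep running afterwards.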

Turn on RX adaptive interrupt moderation

Check whether adaptive interrupt moderation for the receive path (Adaptive RX) is already enabled:

$ ethtool -c eth<x>

If not, turn it on:

$ sudo ethtool -C eth<x> adaptive-rx on

- Note

- This only works if the network card supports adaptive interrupt moderation.

- If Adaptive RX is not enabled by default, the setting made by the above command is lost after the next system boot.
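The check-then-enable steps above can be combined so that `ethtool -C` only runs when Adaptive RX is currently off. A minimal sketch; the helper names are hypothetical, and the parser assumes the usual `ethtool -c` line `Adaptive RX: on  TX: on`.

```shell
# adaptive_rx_is_on: reads `ethtool -c IFACE` output on stdin and succeeds
# if the "Adaptive RX:" field reports "on" (not "off" or "n/a").
adaptive_rx_is_on() {
  grep -Eq '^Adaptive RX:[[:space:]]*on([[:space:]]|$)'
}

# ensure_adaptive_rx IFACE: hypothetical helper that enables Adaptive RX only
# if it is currently off. Needs root for `ethtool -C`; lost on reboot.
ensure_adaptive_rx() {
  if ethtool -c "$1" | adaptive_rx_is_on; then
    echo "$1: Adaptive RX already on"
  else
    sudo ethtool -C "$1" adaptive-rx on
  fi
}
```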

Increase RX/TX ring size

Query the maximum and current RX/TX ring sizes by calling:

$ ethtool -g eth<x>

Set both ring sizes to the maximum reported by the query (8192 in this example):

$ sudo ethtool -G eth<x> rx 8192 tx 8192

- Note

- The ring sizes set by the above command are reset to their defaults after the next system boot.
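Instead of hard-coding 8192, the pre-set maximums can be read from `ethtool -g` and passed to `ethtool -G`. A minimal sketch; the helper names are hypothetical, and the parser assumes the usual `ethtool -g` layout with a `Pre-set maximums:` section followed by `Current hardware settings:`.

```shell
# ring_max KEY: reads `ethtool -g IFACE` output on stdin and prints the
# pre-set maximum for KEY ("RX" or "TX").
ring_max() {
  awk -v key="$1:" '
    /^Pre-set maximums:/          { in_max = 1; next }
    /^Current hardware settings:/ { in_max = 0 }
    in_max && $1 == key           { print $2; exit }
  '
}

# set_rings_to_max IFACE: hypothetical helper that sets the RX and TX rings to
# the maximum the driver reports. Needs root for `ethtool -G`; lost on reboot.
set_rings_to_max() {
  local info rx tx
  info="$(ethtool -g "$1")" || return 1
  rx="$(printf '%s\n' "$info" | ring_max RX)"
  tx="$(printf '%s\n' "$info" | ring_max TX)"
  [ -n "$rx" ] && [ -n "$tx" ] || return 1
  sudo ethtool -G "$1" rx "$rx" tx "$tx"
}
```

Matching on the exact first field `RX:` keeps the parser from picking up the `RX Mini:` and `RX Jumbo:` lines.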

Increase the socket buffer size

It is recommended to increase the maximum network socket receive and send buffer sizes to 16 MiB (16777216 bytes). These and the related values below can be set permanently in /etc/sysctl.d/62-buffers-performance.conf:

| Setting | Value | Description |
|---|---|---|
| net.core.wmem_max | 16777216 | Maximum size of a socket send buffer, in bytes |
| net.core.rmem_max | 16777216 | Maximum size of a socket receive buffer, in bytes |
| net.core.netdev_max_backlog | 10000 | Maximum number of received packets that are queued when the kernel cannot process them as fast as they arrive |
| net.ipv4.udp_mem | 10240 87380 16777216 | Minimum, pressure and maximum thresholds, in memory pages, for the memory that all UDP sockets together may use for queueing |

- Note

- If /etc/sysctl.d/62-buffers-performance.conf does not exist yet, create it.

- Refer to Network Performance Settings for more information.
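The table above translates directly into a sysctl.d file. A minimal sketch that prints the file contents; the helper name `buffer_sysctl_conf` is hypothetical, and the values are exactly those from the table.

```shell
# buffer_sysctl_conf: hypothetical helper that prints the settings from the
# table in sysctl.d syntax ("key = value"; udp_mem values are space-separated).
buffer_sysctl_conf() {
  cat <<'EOF'
net.core.wmem_max = 16777216
net.core.rmem_max = 16777216
net.core.netdev_max_backlog = 10000
net.ipv4.udp_mem = 10240 87380 16777216
EOF
}

# Install and load without rebooting (needs root):
#   buffer_sysctl_conf | sudo tee /etc/sysctl.d/62-buffers-performance.conf >/dev/null
#   sudo sysctl --system
# Check a value afterwards:
#   sysctl net.core.rmem_max
```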

Increase MTU

If possible, increase the MTU of the network card to at least 8000 bytes to enable jumbo frames.

| Setting | Value |
|---|---|
| MTU | 8000 bytes |

Refer to Network Performance Settings to learn how to set this value temporarily or permanently.
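For a quick temporary test, the MTU can be raised with iproute2. A minimal sketch; the helper names are hypothetical, the change is lost on reboot, and making it permanent depends on the network configuration tool in use (not shown here).

```shell
# parse_mtu: reads `ip link show dev IFACE` output on stdin and prints the MTU.
parse_mtu() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "mtu") { print $(i + 1); exit } }'
}

# ensure_mtu IFACE [MTU]: hypothetical helper that raises the MTU (default
# 8000) only if the current value is lower. Needs root; lost on reboot.
ensure_mtu() {
  local want="${2:-8000}" have
  have="$(ip link show dev "$1" | parse_mtu)"
  [ -n "$have" ] || return 1
  if [ "$have" -lt "$want" ]; then
    sudo ip link set dev "$1" mtu "$want"
  fi
}
```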

Manually distribute tasks across CPU cores

If the same CPU core has to receive network interrupts and process image data at the same time, the large amount of image data from 10GigE transmission can overload it, which leads to lost and incomplete frames. To avoid this, tasks can be assigned to different CPU cores manually.

Direct interrupts to one CPU core

There are two ways to define which CPU cores receive the network packet interrupts.

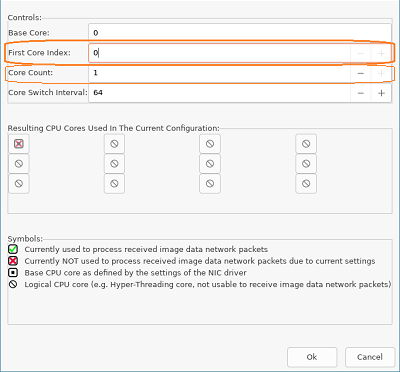

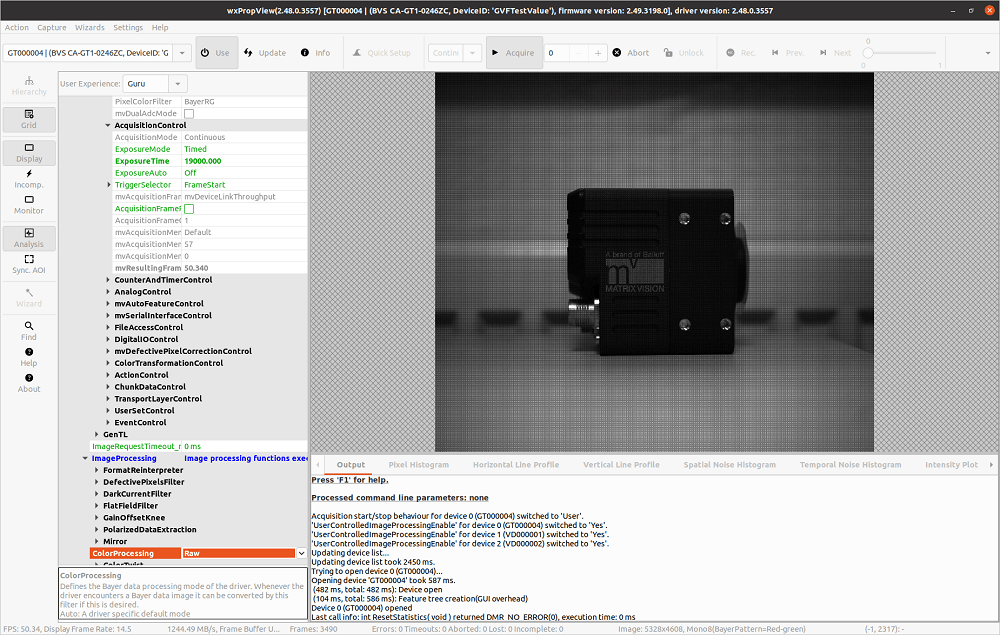

The first option is to use the Multi-Core Acquisition Optimizer. To do so, keep the default number of RSS channels (e.g. 12 channels in power mode MAXN) and set Core Count and First Core Index. For example, setting Core Count to 1 and First Core Index to 0 makes the first CPU core receive all network packet interrupts. This can be configured either with the Multi-Core Acquisition Optimizer Wizard (see the screenshots) or with the corresponding API functions of Impact Acquire.

- Note

- On Linux, Mellanox ConnectX family network cards work with this option if Impact Acquire version 2.48.0 or later is installed.

The second option is to limit the number of RSS channels to one (the first queue), so that only the first CPU core receives interrupts:

$ sudo ethtool -L eth<x> combined 1
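Whichever option is used, /proc/interrupts shows which cores actually receive the network card's interrupts. A minimal sketch that sums the per-CPU counters of all interrupt lines matching a pattern; the helper name is hypothetical, and the pattern `mlx5` is an assumption based on ConnectX-4 cards using the mlx5 driver (IRQ names vary with driver and kernel version).

```shell
# irq_counts_per_cpu PATTERN: reads /proc/interrupts-formatted text on stdin
# and prints "CPU<n> <total>" per CPU, summed over lines matching PATTERN.
irq_counts_per_cpu() {
  awk -v pat="$1" '
    NR == 1   { ncpu = NF; for (i = 1; i <= NF; i++) name[i] = $i; next }
    $0 ~ pat  { for (i = 1; i <= ncpu; i++) sum[i] += $(i + 1) }
    END       { for (i = 1; i <= ncpu; i++) printf "%s %d\n", name[i], sum[i] }
  '
}

# Usage: run before and after a short acquisition and compare the totals;
# ideally only the core(s) chosen above show a growing count.
#   irq_counts_per_cpu mlx5 < /proc/interrupts
```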

Set CPU affinity of the user application

To keep the user application off the CPU cores dedicated to receiving network packet interrupts, set its CPU affinity mask with taskset. For instance, if the first CPU core is assigned to receive interrupts as described above, run the user application on the other 11 CPU cores (in MAXN power mode) with the hexadecimal mask ffe, which selects cores 1 to 11:

$ taskset ffe <user_application>
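The mask ffe is binary 1111 1111 1110: bit n stands for core n, and bit 0 (core 0) is cleared. A minimal sketch that computes such a mask for other core counts; the helper name `affinity_mask` is hypothetical.

```shell
# affinity_mask TOTAL SKIP: hypothetical helper that prints, in the hex form
# taskset expects, a mask covering cores SKIP..TOTAL-1, i.e. all cores except
# the first SKIP cores reserved for network interrupts.
affinity_mask() {
  local total="$1" skip="$2"
  printf '%x\n' $(( ((1 << total) - 1) & ~((1 << skip) - 1) ))
}

# Usage (on a 12-core system in MAXN this yields ffe):
#   taskset "$(affinity_mask "$(nproc)" 1)" <user_application>
# taskset also accepts a core list instead of a mask:
#   taskset -c 1-11 <user_application>
```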

Benchmarks

The following scenarios were tested:

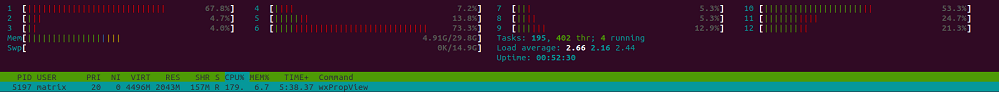

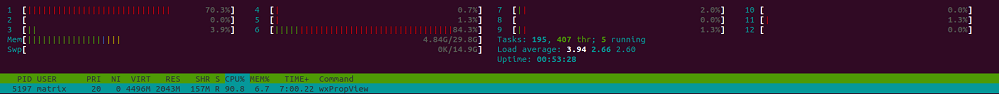

- When de-Bayering is carried out on the camera: The camera delivers RGB8 image data to the host system. This results in a lower CPU load, but also in a lower frame rate, since each RGB8 pixel occupies three bytes instead of one.

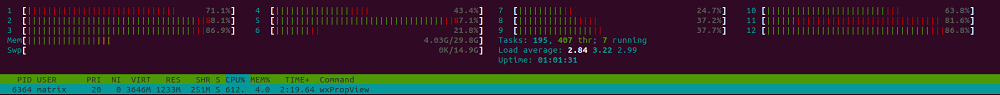

- When de-Bayering is carried out on the host system: The camera delivers Bayer8 image data to the host system. The Bayer8 image data is then de-Bayered to RGB8 format on the host system. This results in a higher frame rate, but also in a higher CPU load.

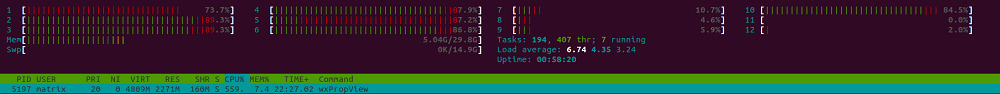

- When no de-Bayering is performed: The camera delivers Bayer8 image data to the host system. No de-Bayering is performed. This setting results in a lower CPU load and a higher frame rate. The behavior is identical to that of monochrome cameras.

| Camera | Resolution | Pixel Format | Frame Rate [Frames/s] | Bandwidth [MB/s] | CPU Load With Image Display | CPU Load Without Image Display |

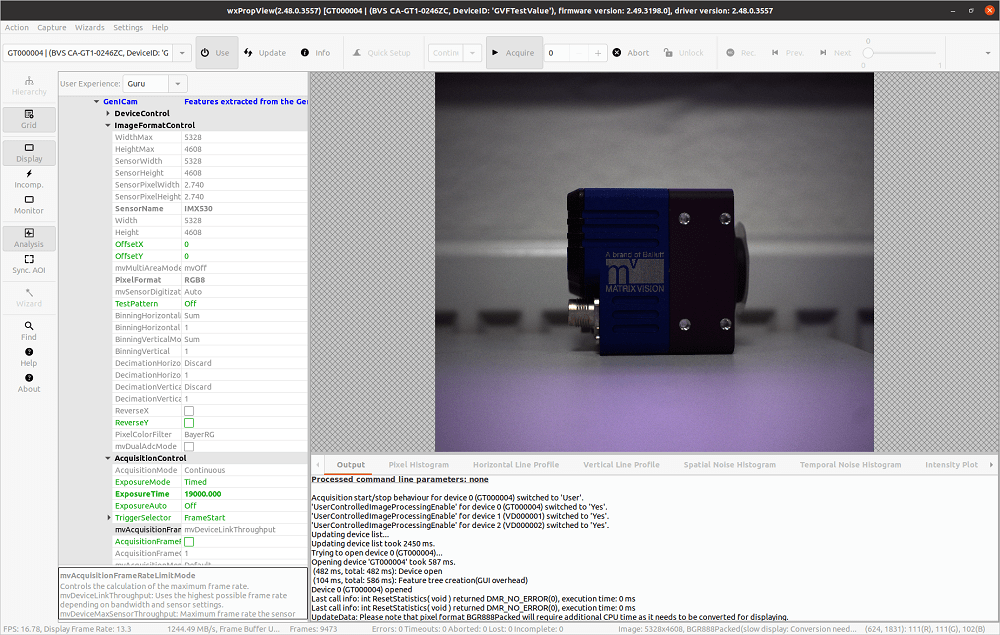

| CA-GT1-0246ZC | 5328 x 4608 | RGB8 (on camera) → RGB8 (on host) | 16.78 | 1244.49 | ~24% | ~15% |


| Camera | Resolution | Pixel Format | Frame Rate [Frames/s] | Bandwidth [MB/s] | CPU Load With Image Display | CPU Load Without Image Display |

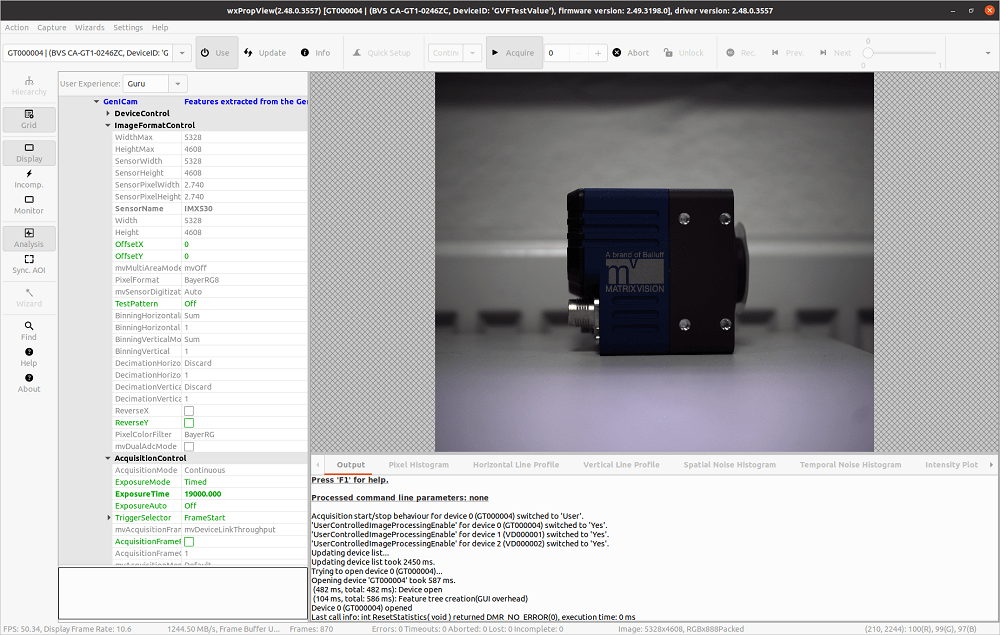

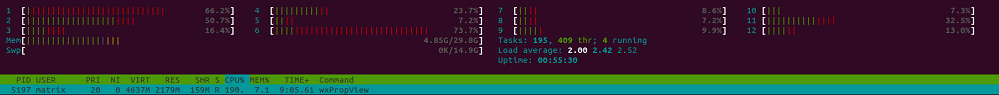

| CA-GT1-0246ZC | 5328 x 4608 | BayerRG8 (on camera) → RGB8 (on host) | 50.34 | 1244.50 | ~61% | ~52% |


| Camera | Resolution | Pixel Format | Frame Rate [Frames/s] | Bandwidth [MB/s] | CPU Load With Image Display | CPU Load Without Image Display |

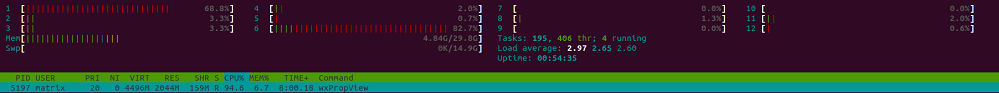

| CA-GT1-0246ZC | 5328 x 4608 | BayerRG8 (on camera) → BayerRG8/Raw (on host) | 50.34 | 1244.49 | ~26% | ~14% |

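The frame rates in the tables follow from simple arithmetic: RGB8 needs 3 bytes per pixel on the link, BayerRG8 only 1 byte, and 50.34 / 16.78 = 3.0. A minimal sketch of the payload calculation, counting pixel data only and assuming 1 MB = 10^6 bytes (the helper name is hypothetical): both cases carry about 1235.9 MB/s of pixel data, close to the nominal 1250 MB/s of a 10 GbE link, which suggests that the link, not the CPU, limits the frame rate here.

```shell
# payload_mb_per_s WIDTH HEIGHT BYTES_PER_PIXEL FPS: hypothetical helper that
# prints the raw pixel payload rate in MB/s (1 MB = 10^6 bytes), ignoring any
# protocol overhead. LC_ALL=C keeps "." as the decimal separator.
payload_mb_per_s() {
  LC_ALL=C awk -v w="$1" -v h="$2" -v b="$3" -v f="$4" \
    'BEGIN { printf "%.1f\n", w * h * b * f / 1e6 }'
}

# 10 GbE nominal line rate: 10^10 bit/s / 8 = 1250 MB/s.
#   payload_mb_per_s 5328 4608 3 16.78   # RGB8 on camera     -> 1235.9
#   payload_mb_per_s 5328 4608 1 50.34   # BayerRG8 on camera -> 1235.9
```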